Hi, not sure if I understand correctly, but I’ve read that PHD2 guiding is done by dithering between exposures (unfortunately I’m not too sure what that really is!). How does this work if I’m doing 5 minute exposures? Seems a long time! Or do I use it like normal with my QHY 5 11m guide camera through SGpro?

Hope someone can explain. Apart from that, I’m finding SGpro absolutely brilliant.

Kind regards,

P.

If you are doing a 5 minute exposure, PHD2 will dither BETWEEN exposures. This small movement makes it easier to combat noise in your final image. You can set the size of your dither in SGP (a large dither for shorter focal length scopes and a small dither for longer focal lengths). You can also set how often it dithers, i.e. after every sub or every x subs.

Hope that makes sense

I was just thinking, ‘Lordy, I still don’t understand this, but one day I’ll think, aaahhhh’. Well, that’s just happened (I think). Am I right in thinking that the guiding is done with the guide scope, as normal, and the dithering is an extra to reduce noise?

P.

Every camera has some noise that is “dedicated to a given pixel” (over-simplification so don’t jump on me). By shifting which pixel a given object sits on in each sub-exposure, the noise can be reduced in the final image. Of course, dithering MUST be combined with registering each sub-exposure to the stars when processing the sub-exposures into the final image. Dithering is critical to a good deep sky image, IMHO. I typically dither between each exposure (some disagree and I will not argue the point; doing this since the 1990s has convinced me).
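
To make the idea concrete, here is a minimal Python sketch of a toy 1-D “sensor” with a single hot pixel. It is only an illustration under made-up numbers, not how any stacking program actually works; the names `make_sub`, `register` and `mean_stack` are invented for this example.

```python
# Toy 1-D model: a 12-pixel "sensor" with one hot pixel stuck at index 5.
# All values are invented for illustration.
WIDTH = 12
HOT_INDEX = 5
HOT_VALUE = 100.0
STAR_VALUE = 50.0
STAR_SKY_POS = 3  # where the star lands when the dither offset is 0


def make_sub(offset):
    """Simulate one sub-exposure with the sky shifted by `offset` pixels."""
    sub = [0.0] * WIDTH
    sub[STAR_SKY_POS + offset] += STAR_VALUE  # the star moves with the dither
    sub[HOT_INDEX] += HOT_VALUE               # the hot pixel stays on the sensor
    return sub


def register(sub, offset):
    """Shift a sub back by `offset` so the star returns to STAR_SKY_POS."""
    out = [0.0] * WIDTH
    for i, value in enumerate(sub):
        j = i - offset
        if 0 <= j < WIDTH:
            out[j] = value
    return out


def mean_stack(offsets):
    """Register each sub to the star, then average pixel by pixel."""
    subs = [register(make_sub(o), o) for o in offsets]
    return [sum(column) / len(subs) for column in zip(*subs)]


def worst_residual(stack):
    """Largest value left anywhere except at the star's position."""
    return max(v for i, v in enumerate(stack) if i != STAR_SKY_POS)


undithered = mean_stack([0, 0, 0, 0])
dithered = mean_stack([0, 1, 3, 4])

print("star signal:", undithered[STAR_SKY_POS], dithered[STAR_SKY_POS])
print("worst hot-pixel residual:", worst_residual(undithered), worst_residual(dithered))
```

In this toy case the star keeps its value of 50 either way, while the hot pixel stays at its full 100 in the undithered stack and is spread into four spots of 25 each in the dithered one. A plain average only dilutes the hot pixel; outlier rejection during stacking is what removes it completely.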

Like CCDMan said, dithering is used to randomize the noise that is inherent in your camera. If you didn’t dither you would just be stacking the same fixed noise on top of itself in every frame, so it would be reinforced instead of averaged out (not good).

PHD2 handles the actual movement of the dither but SGP tells PHD2 when to dither. Essentially a frame finishes in SGP and then SGP tells PHD2 to dither. Once PHD2 is done it tells SGP that the movement is complete and that you’re guiding again and then SGP starts another image.
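
A rough sketch of that handshake in Python, for anyone who likes to see it as code. This is not SGP’s actual code: the `run_sequence` loop is hypothetical and uses canned events instead of a network connection. The message shapes (a JSON-RPC `dither` request and a `SettleDone` event whose `Status` is 0 on success) follow the PHD2 event-server documentation as best understood here, so check that documentation before relying on them.

```python
import json


def build_dither_request(amount_px, ra_only=False, settle_px=1.5,
                         settle_time_s=8, settle_timeout_s=40, request_id=1):
    """Build one JSON-RPC 'dither' request line (assumed PHD2 message shape)."""
    request = {
        "method": "dither",
        "params": [
            amount_px,
            ra_only,
            {"pixels": settle_px, "time": settle_time_s,
             "timeout": settle_timeout_s},
        ],
        "id": request_id,
    }
    return json.dumps(request) + "\r\n"


def settle_result(event_line):
    """Return None for unrelated events, else True/False for settle success."""
    event = json.loads(event_line)
    if event.get("Event") != "SettleDone":
        return None
    return event.get("Status") == 0


def run_sequence(frame_count, guider_events):
    """Hypothetical capture loop: expose, ask for a dither, wait for settle."""
    log = []
    events = iter(guider_events)
    for frame in range(1, frame_count + 1):
        log.append(f"expose frame {frame}")
        if frame == frame_count:
            break  # nothing to dither for after the last frame
        log.append("send dither request")
        for line in events:
            result = settle_result(line)
            if result is None:
                continue  # some other guider event, keep waiting
            log.append("settled" if result else "settle failed")
            break
    return log


# Canned guider output standing in for a real connection.
fake_events = [
    '{"Event": "GuideStep"}',
    '{"Event": "SettleDone", "Status": 0}',
    '{"Event": "GuideStep"}',
    '{"Event": "SettleDone", "Status": 0}',
]
sequence_log = run_sequence(3, fake_events)
print(build_dither_request(3), end="")
print(sequence_log)
```

The point of the loop is the ordering: the next exposure does not start until the guider has reported that it has settled after the dither.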

You can control the amount of dither that SGP will request on the Auto Guide Tab. If you have a long focal length imaging scope you probably want a small amount of dither. If you have a very wide field imaging scope you want a lot of dither.
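
The focal length advice comes down to image scale arithmetic. The sketch below assumes the dither is expressed in guide-camera pixels and uses the usual small-angle formula (arcseconds per pixel is roughly 206.265 × pixel size in µm ÷ focal length in mm). The camera and scope numbers are hypothetical, and the function names are made up for this example.

```python
ARCSEC_PER_RADIAN = 206264.806


def image_scale(pixel_um, focal_mm):
    """Arcseconds of sky covered by one pixel."""
    return ARCSEC_PER_RADIAN * (pixel_um / 1000.0) / focal_mm


def dither_on_imaging_sensor(dither_guide_px, guide_pixel_um, guide_focal_mm,
                             img_pixel_um, img_focal_mm):
    """Convert a dither in guide-camera pixels to imaging-camera pixels."""
    dither_arcsec = dither_guide_px * image_scale(guide_pixel_um, guide_focal_mm)
    return dither_arcsec / image_scale(img_pixel_um, img_focal_mm)


# Hypothetical setup: 3.75 um guide camera on a 240 mm guide scope,
# 5.4 um imaging camera, and a dither of 3 guide-camera pixels.
short_fl = dither_on_imaging_sensor(3, 3.75, 240, 5.4, 400)
long_fl = dither_on_imaging_sensor(3, 3.75, 240, 5.4, 2000)

print(f"400 mm imaging scope:  {short_fl:.1f} imaging pixels")
print(f"2000 mm imaging scope: {long_fl:.1f} imaging pixels")
```

With these made-up numbers the same 3-pixel guide dither moves the image about 3.5 pixels at 400 mm but about 17.4 pixels at 2000 mm, which is why a long focal length scope needs a smaller dither setting to get a similar shift on the imaging sensor.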

Hope that helps,

Jared

Thanks Jared,

That has really explained it, I just never knew. I’ve been doing imaging for about 3 years now and I’m still learning. Potentially a very complicated subject and a very steep learning curve.

I never dithered with my Canon 40D, just took images through APT. I wonder what they would have come out like if I’d known!!!

Thanks again,

P.

The concept of dithering became obvious to me after I took several images that were being dithered and used the Blink process from PixInsight to examine the images. What I saw were the astronomical objects (stars, galaxy etc.) moving around as the images blinked, while the bright pixels stayed in the same location. This demonstrated that when the images are stacked and aligned, the bright and dark pixels will not be aligned on top of each other from frame to frame.

Fred

Thanks Fred, yes, it all seems so logical now.

How do you find PixInsight? I’ve tried a couple of processing programs but now I’m seriously looking for something a bit more versatile.

Kind regards,

P.

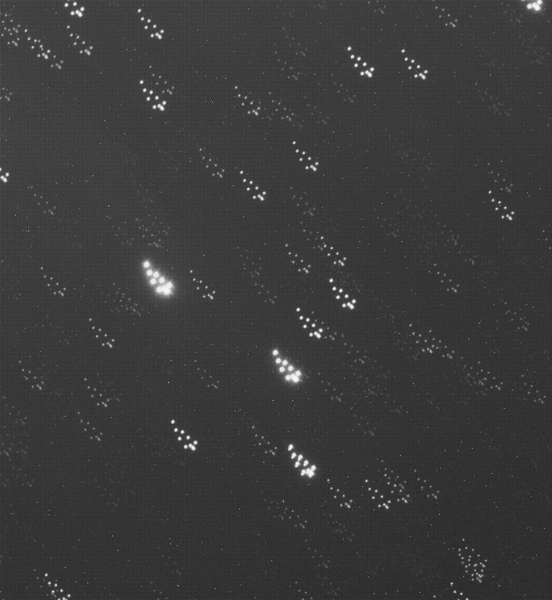

I thought a couple of pictures might shed some light on this discussion.

The first image is an “integration” of ten dithered subs BEFORE the stars have been “registered”. As you can see, each image is displaced slightly from the previous image. If you look closely, you can see hot pixels that are physically on the sensor. (You may need to enlarge the image to see the hot pixels.)

The second image is an “integration” of the subs AFTER they have been registered. Now the stars are all aligned, but the hot pixels have been clipped out of the image.

Note: The raw data seen here hasn’t had darks, bias, and flat frames applied. Many of the hot pixels would have been removed during the “calibration” process.
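
Why the hot pixels get clipped once the subs are registered can be shown with a tiny Python sketch. After registration, a hot pixel lands on any given patch of sky in only one sub, so it stands out as an outlier among the values for that spot. The sample values are made up, and `clipped_mean` is a crude stand-in for illustration, not the rejection algorithm of PixInsight or any other program.

```python
from statistics import mean, median

# Values that one sky position takes across ten registered, dithered subs.
# Nine subs see only sky background; in one sub a hot pixel landed here.
samples = [10.0, 11.0, 9.0, 10.0, 12.0, 10.0, 250.0, 9.0, 11.0, 10.0]


def clipped_mean(values, max_dev):
    """Average only the values within `max_dev` of the median (a crude clip)."""
    centre = median(values)
    kept = [v for v in values if abs(v - centre) <= max_dev]
    return mean(kept)


plain = mean(samples)
clipped = clipped_mean(samples, max_dev=5.0)

print(f"plain average:   {plain:.1f}")
print(f"clipped average: {clipped:.1f}")
```

The plain average is dragged well above the background by the single hot value, while the clipped average stays close to it. Without dithering, the hot pixel would sit on the same spot in every sub and there would be nothing for the rejection to compare it against.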

Thanks Rob, yes, I see now exactly what it does. Clever stuff. It just goes to show that although the digital age has opened up so much to so many, it also creates its own inherent problems. But, yes, sorted.

Thanks again,

P.

I am fairly new to PixInsight. I was having a hard time learning the basic processes until I bought Warren Keller’s book “Inside PixInsight”. His book got me over the hump. I am still far from an expert. However, I am getting good results with PI.

Fred